Brief Review — Learning to Prompt for Vision-Language Models

Context Optimization (CoOp), Improves CLIP

Learning to Prompt for Vision-Language Models

Context Optimization (CoOp), by Nanyang Technological University

2022 IJCV, Over 1300 Citations (Sik-Ho Tsang @ Medium)

- Context Optimization (CoOp) is proposed as a simple approach for adapting CLIP-like vision-language models to downstream image recognition. Concretely, CoOp models a prompt’s context words with learnable vectors while all pre-trained parameters are kept frozen.

- To handle different image recognition tasks, two implementations of CoOp are provided: unified context (UC) and class-specific context (CSC).

Outline

- Context Optimization (CoOp)

- Results

1. Context Optimization (CoOp)

1.1. Prior Art: CLIP

- Formally, let $f$ be the image features extracted by the image encoder for an image $x$, and let $\{w_i\}_{i=1}^{K}$ be a set of weight vectors generated by the text encoder. $K$ denotes the number of classes, and each $w_i$ is derived from a prompt of the form “a photo of a [CLASS].”, in which the class token is replaced by a specific class name such as “cat,” “dog,” or “car.”

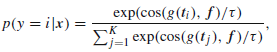

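- The prediction probability is then computed as:

$$p(y = i \mid x) = \frac{\exp(\cos(w_i, f)/\tau)}{\sum_{j=1}^{K} \exp(\cos(w_j, f)/\tau)}$$

- where $\tau$ is a temperature parameter learned by CLIP and $\cos(\cdot, \cdot)$ denotes cosine similarity.

- During pre-training, CLIP maximizes the cosine similarity of matched image-text pairs while minimizing it for all other, unmatched pairs.

- To learn diverse visual concepts that transfer better to downstream tasks, CLIP’s team collected a large training dataset of 400 million image-text pairs.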
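A minimal, dependency-free sketch of CLIP's zero-shot prediction rule: softmax over temperature-scaled cosine similarities between one image feature $f$ and the $K$ text-derived weights $w_i$. The 2-D vectors are made up, and `tau=0.01` is an assumed value; the function names are illustrative, not CLIP's API.

```python
import math
from typing import List, Sequence

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def clip_zero_shot_probs(f: Sequence[float],
                         weights: Sequence[Sequence[float]],
                         tau: float = 0.01) -> List[float]:
    """p(y = i | x) = exp(cos(w_i, f) / tau) / sum_j exp(cos(w_j, f) / tau)."""
    return softmax([cosine(w, f) / tau for w in weights])

# Toy 2-D example: made-up "text encoder outputs" for the prompts
# "a photo of a cat.", "a photo of a dog.", "a photo of a car."
weights = [[0.9, 0.1], [0.0, 1.0], [-1.0, 0.0]]
image_feature = [1.0, 0.0]
probs = clip_zero_shot_probs(image_feature, weights)
predicted = max(range(len(probs)), key=probs.__getitem__)  # index 0, i.e. "cat"
```

A small $\tau$ sharpens the distribution. With `tau=1.0` the same similarities give a much flatter output.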

1.2. Context Optimization (CoOp)

Context Optimization (CoOp) avoids manual prompt tuning by modeling context words with continuous vectors that are learned end-to-end from data, while the massive pre-trained parameters stay frozen.

1.2.1. Unified Context (UC)

- The unified context version shares the same context with all classes. Specifically, the prompt given to the text encoder $g(\cdot)$ has the following form:

$$t = [V]_1 [V]_2 \dots [V]_M [\text{CLASS}]$$

- where each $[V]_m$ ($m \in \{1, \dots, M\}$) is a vector with the same dimension as the word embeddings, and $M$ is the number of context tokens.

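- By forwarding a prompt $t$ to the text encoder $g(\cdot)$, the prediction probability is computed as:

$$p(y = i \mid x) = \frac{\exp(\cos(g(t_i), f)/\tau)}{\sum_{j=1}^{K} \exp(\cos(g(t_j), f)/\tau)}$$

- where the class token within each prompt $t_i$ is replaced by the corresponding word embedding vector(s) of the $i$-th class name, and $\tau$ is CLIP’s learned temperature.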

- The class token can also be placed in the middle of the context:

$$t = [V]_1 \dots [V]_{M/2} [\text{CLASS}] [V]_{M/2+1} \dots [V]_M$$

- which increases flexibility for learning.
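The two unified-context layouts can be sketched as plain list assembly of token embeddings. This is a minimal illustration under simplifying assumptions: the helper names `build_prompt` and `build_uc_prompts` are hypothetical, the embeddings are made-up 2-D vectors, and the start/end-of-text tokens and padding that CLIP's tokenizer adds are omitted.

```python
from typing import List

Vector = List[float]

def build_prompt(context: List[Vector], class_tokens: List[Vector],
                 position: str = "end") -> List[Vector]:
    """Assemble the token-embedding sequence for one class.

    "end":    [V]_1 ... [V]_M [CLASS]
    "middle": [V]_1 ... [V]_{M/2} [CLASS] [V]_{M/2+1} ... [V]_M
    A class name may span several tokens, so class_tokens is a list of vectors.
    """
    if position == "end":
        return context + class_tokens
    if position == "middle":
        half = len(context) // 2
        return context[:half] + class_tokens + context[half:]
    raise ValueError(f"unknown class-token position: {position!r}")

def build_uc_prompts(context: List[Vector], class_names: List[List[Vector]],
                     position: str = "end") -> List[List[Vector]]:
    """Unified context: every class prompt t_i reuses the same M context vectors."""
    return [build_prompt(context, tokens, position) for tokens in class_names]

# Toy setup: M = 4 context vectors of dimension 2; two classes, the second
# with a two-token name. All values are made up for illustration.
context = [[0.1, 0.0], [0.2, 0.0], [0.3, 0.0], [0.4, 0.0]]
class_names = [[[1.0, 1.0]], [[2.0, 2.0], [3.0, 3.0]]]
end_prompts = build_uc_prompts(context, class_names, "end")
mid_prompts = build_uc_prompts(context, class_names, "middle")
```

Because every prompt reuses the same `context` list, a gradient step on those vectors affects all classes at once. That sharing is what "unified" means here.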

1.2.2. Class-Specific Context (CSC)

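- Another option is class-specific context (CSC), where each class has its own independent set of context vectors, i.e., $[V]^i_1 [V]^i_2 \dots [V]^i_M \neq [V]^j_1 [V]^j_2 \dots [V]^j_M$ for $i \neq j$ and $i, j \in \{1, \dots, K\}$.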

- It is found that CSC is particularly useful for some fine-grained classification tasks.
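Compared with UC, CSC multiplies the learnable parameters by $K$: $M \cdot D$ scalars become $K \cdot M \cdot D$. For example, with an assumed word-embedding width $D = 512$, $M = 16$ and $K = 100$, that is 8,192 versus 819,200 scalars. The sketch below shows the difference in the initial parameters. All helpers are hypothetical, and the Gaussian init scale `std=0.02` is an assumption.

```python
import random
from typing import List

Vector = List[float]

def init_context(num_tokens: int, dim: int, rng: random.Random,
                 std: float = 0.02) -> List[Vector]:
    """M context vectors drawn from N(0, std^2); std = 0.02 is an assumed init scale."""
    return [[rng.gauss(0.0, std) for _ in range(dim)] for _ in range(num_tokens)]

def init_unified(num_tokens: int, dim: int, rng: random.Random) -> List[Vector]:
    """UC: a single set of M vectors shared by all K classes."""
    return init_context(num_tokens, dim, rng)

def init_class_specific(num_classes: int, num_tokens: int, dim: int,
                        rng: random.Random) -> List[List[Vector]]:
    """CSC: an independent set [V]^i_1 ... [V]^i_M for every class i."""
    return [init_context(num_tokens, dim, rng) for _ in range(num_classes)]

def csc_prompts(csc_context: List[List[Vector]],
                class_names: List[List[Vector]]) -> List[List[Vector]]:
    """Prompt t_i = [V]^i_1 ... [V]^i_M [CLASS_i], with the class token at the end."""
    return [ctx + tokens for ctx, tokens in zip(csc_context, class_names)]

def count_params(context) -> int:
    """Count the learnable scalars in a (possibly nested) list of context vectors."""
    if context and isinstance(context[0], float):
        return len(context)
    return sum(count_params(c) for c in context)

rng = random.Random(0)
K, M, D = 3, 4, 2  # toy sizes
uc = init_unified(M, D, rng)
csc = init_class_specific(K, M, D, rng)
prompts = csc_prompts(csc, [[[1.0, 0.0]], [[0.0, 1.0]], [[1.0, 1.0]]])
```

The $K$-fold increase in learnable parameters is the cost of letting each class tune its own context.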

1.3. Training

- Training minimizes the standard cross-entropy classification loss. The gradients are back-propagated all the way through the frozen text encoder $g(\cdot)$ to the context vectors, making use of the rich knowledge encoded in its parameters to optimize the context.
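The sketch below shows the key property of this training setup: only the context vectors change, while the class-name embeddings and the encoder stay fixed. It rests on loudly hypothetical simplifications. `frozen_text_encoder` is a mean-pooling stand-in for CLIP's Transformer. Gradients come from central finite differences instead of autograd, to stay dependency-free. The data, the learning rate and the temperature $\tau = 1$ are all made up. This is not CoOp's actual optimizer setup.

```python
import math
from typing import List

Vector = List[float]

def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def frozen_text_encoder(tokens: List[Vector]) -> Vector:
    """HYPOTHETICAL stand-in for CLIP's frozen text encoder g(.): mean-pool the tokens."""
    return [sum(col) / len(tokens) for col in zip(*tokens)]

def cross_entropy_loss(context, class_embs, feats, labels, tau=1.0) -> float:
    """Mean cross-entropy of p(y=i|x) = softmax_i(cos(g(t_i), f) / tau), unified context first."""
    text_feats = [frozen_text_encoder(context + [c]) for c in class_embs]
    total = 0.0
    for f, y in zip(feats, labels):
        logits = [cosine(w, f) / tau for w in text_feats]
        m = max(logits)
        log_z = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_z - logits[y]
    return total / len(feats)

def train_context(context, class_embs, feats, labels, lr=0.05, steps=40, h=1e-5):
    """Plain gradient descent that updates ONLY a copy of the context vectors.

    Gradients are central finite differences so the sketch needs no autograd
    library; class_embs (and the stand-in encoder) are never modified.
    """
    ctx = [v[:] for v in context]
    for _ in range(steps):
        grad = [[0.0] * len(v) for v in ctx]
        for m, vec in enumerate(ctx):
            for d in range(len(vec)):
                orig = vec[d]
                vec[d] = orig + h
                up = cross_entropy_loss(ctx, class_embs, feats, labels)
                vec[d] = orig - h
                down = cross_entropy_loss(ctx, class_embs, feats, labels)
                vec[d] = orig
                grad[m][d] = (up - down) / (2 * h)
        for vec, g in zip(ctx, grad):
            for d in range(len(vec)):
                vec[d] -= lr * g[d]
    return ctx

# Made-up toy data: 3 one-token class names, 4 labelled image features, M = 2.
class_embs = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
feats = [[1.0, 0.2, 0.0], [0.1, 1.0, 0.0], [0.0, 0.1, 1.0], [0.9, 0.0, 0.3]]
labels = [0, 1, 2, 0]
init_ctx = [[0.5, 0.5, 0.5], [0.3, -0.2, 0.1]]

loss_before = cross_entropy_loss(init_ctx, class_embs, feats, labels)
learned_ctx = train_context(init_ctx, class_embs, feats, labels)
loss_after = cross_entropy_loss(learned_ctx, class_embs, feats, labels)
```

In a real implementation the same idea is usually expressed by disabling gradients on every pre-trained weight and passing only the context tensor to the optimizer.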

2. Results

The average performance displayed in the top-left corner shows that CLIP+CoOp is a strong few-shot learner: it needs only two shots on average to obtain a decent margin over zero-shot CLIP.

- Given 16 shots per class for training, the average gap brought by CoOp increases further to around 15%.

- For domain generalization, the source dataset is ImageNet and the target datasets are ImageNetV2, ImageNet-Sketch, ImageNet-A, and ImageNet-R.

Surprisingly, CoOp enhances CLIP’s robustness to distribution shifts even though its prompts are learned only on the source dataset. This suggests that the learned prompts also generalize well.