Review — Instance Normalization: The Missing Ingredient for Fast Stylization

Instance Norm (IN), for Neural Style Transfer

Instance Normalization: The Missing Ingredient for Fast Stylization

2016 arXiv v3, Over 3400 Citations

Improved Texture Networks: Maximizing Quality and Diversity in Feed-forward Stylization and Texture Synthesis

2017 CVPR, Over 780 Citations

StyleNet, Instance Norm (IN), by Computer Vision Group, Skoltech & Yandex; Visual Geometry Group, University of Oxford; Computer Vision Group, Skoltech

(Sik-Ho Tsang @ Medium)

Neural Style Transfer

2016 [Artistic Style Transfer] [Image Style Transfer] [Perceptual Loss] [GAN-CLS, GAN-INT, GAN-CLS-INT]


- The fast stylization method, Texture Network, is revisited. Replacing batch normalization with instance normalization, a small change in the stylization architecture, results in a significant qualitative improvement in the generated images.

- The instance normalization in the 2016 arXiv article is the same as in the 2017 CVPR paper.

- (Instance Normalization is still used by many papers today. My aim is to study Instance Normalization by reading these 2 papers.)

Outline

- Instance Normalization, Instance Norm (IN)

- Results

1. Instance Normalization, Instance Norm (IN)

1.1. Contrast Normalization

- Let x of size T×C×W×H be an input tensor containing a batch of T images. Let x_tijk denote its tijk-th element, where k and j span the spatial dimensions, i is the feature channel (the color channel if the input is an RGB image), and t is the index of the image in the batch.

It is found that the contrast of the stylized image is similar to the contrast of the style image. Thus, the generator network should discard contrast information in the content image.

- A simple version of contrast normalization is given by:
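As a rough sketch, the simple contrast normalization divides each element by the sum over the spatial positions of its own image and channel, i.e. y_tijk = x_tijk / Σ_l Σ_m x_tilm with l = 1..W and m = 1..H. The formula is reconstructed here from the notation above, so treat it as an assumption. The NumPy snippet below is illustrative only; the function name `contrast_normalize` and the toy input are made up for this example.

```python
import numpy as np

def contrast_normalize(x):
    """Divide every element by the spatial sum of its own image and channel.

    x has shape (T, C, W, H); inputs are assumed positive so the sum is nonzero.
    """
    spatial_sum = x.sum(axis=(2, 3), keepdims=True)  # shape (T, C, 1, 1)
    return x / spatial_sum

# Toy batch (hypothetical data): image 1 is image 0 with 3x the intensity.
base = np.linspace(0.1, 1.0, 16).reshape(1, 4, 4)
img0 = np.tile(base, (3, 1, 1))      # shape (3, 4, 4)
x = np.stack([img0, 3.0 * img0])     # shape (2, 3, 4, 4)

y = contrast_normalize(x)
# The intensity scale of each image is discarded, so both outputs coincide.
print(np.allclose(y[0], y[1]))
```

Because the statistic is computed per image, rescaling one image does not affect the output of the other.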

1.2. Batch Normalization

- However, Texture Network uses conventional batch normalization, which applies the normalization to a whole batch of images instead of to single images:
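For reference, batch normalization (without the learned scale and shift) is usually written as y_tijk = (x_tijk − μ_i) / sqrt(σ_i² + ε), where μ_i and σ_i² are the mean and variance of channel i taken over the batch index t and both spatial indices. The sketch below assumes that form; `batch_norm`, `eps` and the toy batch are names chosen for this example, not taken from the paper's code.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Normalize each channel with statistics shared by the whole batch.

    x has shape (T, C, W, H); mean and variance are taken over T, W and H.
    """
    mu = x.mean(axis=(0, 2, 3), keepdims=True)   # shape (1, C, 1, 1)
    var = x.var(axis=(0, 2, 3), keepdims=True)   # shape (1, C, 1, 1)
    return (x - mu) / np.sqrt(var + eps)

# Toy batch (hypothetical data): image 1 is a brighter, higher-contrast copy of image 0.
base = np.linspace(-1.0, 1.0, 16).reshape(1, 4, 4)
img0 = np.tile(base, (3, 1, 1))          # shape (3, 4, 4)
x = np.stack([img0, 5.0 * img0 + 2.0])   # shape (2, 3, 4, 4)

y = batch_norm(x)
batch_mean = y.mean(axis=(0, 2, 3))      # one value per channel
per_image_mean = y.mean(axis=(2, 3))     # one value per image and channel
print(batch_mean)
print(per_image_mean)
```

The batch-level mean is zero, but each individual image keeps an offset and scale that depend on the other image in the batch, so per-image contrast is not removed.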

1.3. Proposed Instance Normalization

- Instance normalization is proposed to be used everywhere in the generator network to replace batch normalization:

- The instance normalization layer is applied at test time as well.

- In the 2017 CVPR paper, the network using instance normalization is named StyleNet.
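Instance normalization can be sketched the same way as batch normalization, except that the statistics are computed per image and per channel: y_tijk = (x_tijk − μ_ti) / sqrt(σ_ti² + ε), with μ_ti and σ_ti² taken over the spatial indices only. The formula is reconstructed from the notation above, and `instance_norm`, `eps` and the toy batch are assumptions made for this example.

```python
import numpy as np

def instance_norm(x, eps=1e-5):
    """Normalize each (image, channel) slice with its own spatial statistics.

    x has shape (T, C, W, H); mean and variance are taken over W and H only.
    """
    mu = x.mean(axis=(2, 3), keepdims=True)   # shape (T, C, 1, 1)
    var = x.var(axis=(2, 3), keepdims=True)   # shape (T, C, 1, 1)
    return (x - mu) / np.sqrt(var + eps)

# Toy batch (hypothetical data): image 1 is a brighter, higher-contrast copy of image 0.
base = np.linspace(-1.0, 1.0, 16).reshape(1, 4, 4)
img0 = np.tile(base, (3, 1, 1))          # shape (3, 4, 4)
x = np.stack([img0, 5.0 * img0 + 2.0])   # shape (2, 3, 4, 4)

y = instance_norm(x)
# No batch statistics are involved, so a single image gives the same result
# as it does inside a larger batch.
y_single = instance_norm(x[:1])
print(np.allclose(y[0], y[1], atol=1e-3))
print(np.allclose(y_single[0], y[0]))
```

Since nothing depends on the rest of the batch, the same function can be used unchanged at test time on a single image, which matches the note above that the layer is applied at test time as well.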

2. Results

2.1. Examples (2016 arXiv)

2.2. SOTA Comparisons (2016 arXiv)

2.3. Different Resolutions (2016 arXiv)

2.4. Further Results (2017 CVPR)

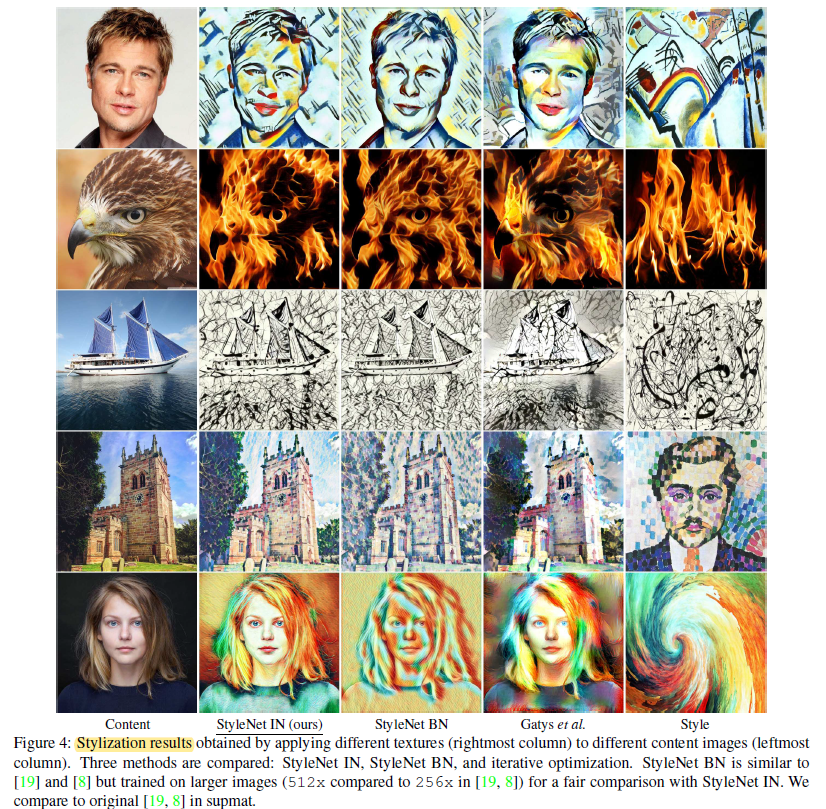

- The proposed network using IN is named StyleNet in the 2017 CVPR paper.

The IN variant is far superior to the BN variant and much closer to the results obtained by the much slower iterative method of Image Style Transfer (Gatys et al.).