Review — Neural Machine Translation of Rare Words with Subword Units

Byte Pair Encoding (BPE), Using Subwords for Rare Words

Neural Machine Translation of Rare Words with Subword Units

BPE, by University of Edinburgh

2016 ACL, Over 5400 Citations (Sik-Ho Tsang @ Medium)

Natural Language Processing, NLP, Neural Machine Translation, NMT

- A simple and effective approach is introduced where rare and unknown words are encoded as sequences of subword units.

Outline

- Subword Translation

- Experimental Results

1. Subword Translation

1.1. Intuition

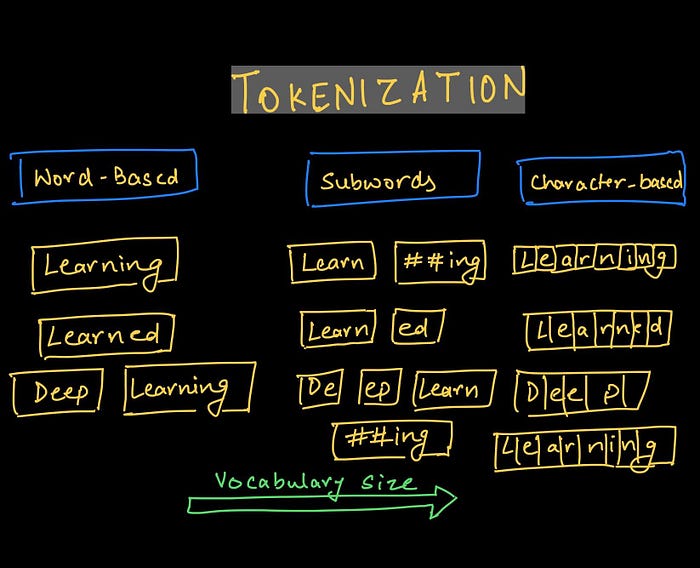

Rare and unknown words can be encoded as sequences of subword units.

- This is based on the intuition that various word classes are translatable via smaller units than words, for instance names, compounds, as well as cognates and loanwords.

- Word categories whose translation is potentially transparent include named entities, cognates and loanwords, and morphologically complex words.

1.2. Byte Pair Encoding (BPE)

- Byte Pair Encoding (BPE) (Gage, 1994) is a simple data compression technique that iteratively replaces the most frequent pair of bytes in a sequence with a single, unused byte.

- The authors adapt this algorithm for word segmentation. Instead of merging frequent pairs of bytes, they merge characters or character sequences.

- The main difference from other compression algorithms, such as Huffman encoding, is that BPE symbol sequences are still interpretable as subword units, so the network can generalize to translate and produce new words (unseen at training time) on the basis of these subword units.

- In the paper's example, the Out-Of-Vocabulary (OOV) word ‘lower’ would be segmented into ‘low er·’, where ‘·’ marks the end of a word.
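The merge procedure described in this section can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names (`pair_counts`, `merge_pair`, `learn_bpe`, `segment`), the toy dictionary, and the tie-breaking rule (the first most-frequent pair seen wins) are all assumptions made here for the example.

```python
from collections import Counter

END = "·"  # end-of-word marker, as in the segmentation 'low er·'


def pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    counts = Counter()
    for symbols, freq in vocab.items():
        for pair in zip(symbols, symbols[1:]):
            counts[pair] += freq
    return counts


def merge_pair(symbols, pair):
    """Replace each occurrence of `pair` in a symbol tuple with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merge operations from a word-frequency dict."""
    # Each word starts as a sequence of characters plus the end-of-word marker.
    vocab = {tuple(word) + (END,): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        counts = pair_counts(vocab)
        if not counts:
            break
        # Assumption: ties are broken in favour of the first pair encountered.
        best = max(counts, key=counts.get)
        merges.append(best)
        vocab = {merge_pair(s, best): f for s, f in vocab.items()}
    return merges


def segment(word, merges):
    """Segment a (possibly unseen) word by replaying merges in learned order."""
    symbols = tuple(word) + (END,)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


# Hypothetical toy dictionary; 'lower' is not in it, so it is OOV.
toy = {"low": 1, "lowest": 1, "newer": 1, "wider": 1}
merges = learn_bpe(toy, num_merges=4)
result = segment("lower", merges)  # ['low', 'er·']
```

With this toy dictionary, several pairs are equally frequent, so the order of the learned merges depends on the tie-breaking rule and may differ from the order in another implementation. The final segmentation of the unseen word ‘lower’ is still ‘low er·’.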

2. Experimental Results

- Attention Decoder/RNNSearch is used as the NMT network and is trained with different kinds of vocabularies.

- The proposed BPE-J90k obtains better or competitive BLEU and CHRF3 scores.
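For readers unfamiliar with CHRF3, it is the character n-gram F-score with β = 3. A common way to write it is given below, assuming the usual definition of chrF. The symbols chrP and chrR (character n-gram precision and recall, averaged over n-gram orders) are introduced here and do not appear in this review.

```latex
\mathrm{chrF}_{\beta} = (1 + \beta^{2}) \, \frac{\mathrm{chrP} \cdot \mathrm{chrR}}{\beta^{2} \cdot \mathrm{chrP} + \mathrm{chrR}}, \qquad \beta = 3
```

With β = 3, recall is weighted more heavily than precision.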

The major claim is that translation of rare and unknown words is poor in word-level NMT models, and that subword models improve the translation of these word types.

- The above shows some examples of translation using subwords.

Byte Pair Encoding (BPE) has since been used in many subsequent works.

Reference

[2016 ACL] [BPE]

Neural Machine Translation of Rare Words with Subword Units
