Brief Review — Llama 2: Open Foundation and Fine-Tuned Chat Models

Llama 2, Open Source and Released

Website: https://ai.meta.com/llama/

Language Model

1991 … 2023 [GPT-4] [LLaMA] [LIMA] [Koala] [BloombergGPT] [GLM-130B] [UL2] [PaLM 2]

==== My Other Paper Readings Are Also Over Here ====Llama 2: Open Foundation and Fine-Tuned Chat Models

Llama 2, by GenAI, Meta, 2023 arXiv v2 (Sik-Ho Tsang @ Medium)

- Llama 2 is developed and released, ranging in scale from 7 billion to 70 billion parameters.

- The fine-tuned LLMs, called Llama 2-Chat, are optimized for dialogue use cases. These Llama 2 models outperform open-source chat models on most benchmarks, and based on human evaluations for helpfulness and safety, may be a suitable substitute for closed-source models.

Outline

- Llama 2: Pretraining

- Llama 2-Chat: Fine-Tuning

1. Llama 2: Pretraining

1.1. Models & Data

- Llama 2 models use the pretraining approach in Llama 1, using an optimized auto-regressive transformer

- Enhanced techniques are used: More robust data cleaning, updated data mixes, trained on 40% more total tokens, doubled the context length, and used grouped-query attention (GQA) to improve inference scalability for the larger models. The primary architectural differences from Llama 1 include increased context length and GQA.

- The training corpus includes a new mix of data from publicly available sources, which does not include data from Meta’s products or services.

- The models are trained using 2 trillion tokens of data as this provides a good performance–cost trade-off, up-sampling the most factual sources in an effort to increase knowledge and dampen hallucinations.

1.2. Results

- Llama 2 models outperform Llama 1 models. In particular, Llama 2 70B improves the results on MMLU and BBH by ≈5 and ≈8 points, respectively, compared to Llama 1 65B. Llama 2 7B and 30B models outperform MPT models of the corresponding size on all categories besides code benchmarks. For the Falcon models, Llama 2 7B and 34B outperform Falcon 7B and 40B models on all categories of benchmarks.

Additionally, Llama 2 70B model outperforms all open-source models.

- Llama 2 70B is close to GPT-3.5 on MMLU and GSM8K, but there is a significant gap on coding benchmarks. Llama 2 70B results are on par or better than PaLM (540B) on almost all benchmarks.

There is still a large gap in performance between Llama 2 70B and GPT-4 and PaLM-2-L.

2. Llama 2-Chat: Fine-Tuning

- Llama 2-Chat, optimized for dialogue use cases, is the result of several months of research and iterative applications of alignment techniques, including both instruction tuning and Reinforcement Learning with Human Feedback (RLHF), requiring significant computational and annotation resources.

- (The paper describes this paper in very detail and verbose, I will just describe in very brief manner below.)

2.1. Helpfulness & Safety Definitions

- Helpfulness refers to how well Llama 2-Chat responses fulfill users’ requests and provide requested information.

- Safety refers to whether Llama 2-Chat’s responses are unsafe.

- E.g., “giving detailed instructions on making a bomb” could be considered helpful but is unsafe.

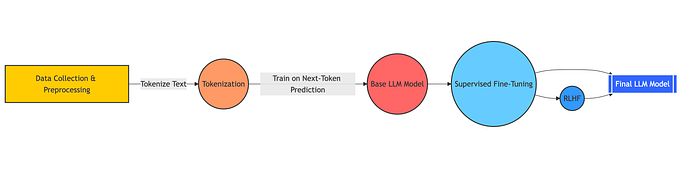

2.2. Procedures

- First, an initial version of Llama 2-Chat is obtained through the application of supervised fine-tuning, similar to LIMA.

- Subsequently, the model is iteratively refined using RLHF methodologies, specifically through rejection sampling and Proximal Policy Optimization (PPO), similar to but more enhanced than GPT-3.5. Throughout the RLHF stage, the accumulation of iterative reward modeling data in parallel with model enhancements is crucial to ensure the reward models remain within distribution.

- Finally, RLHF pipeline is refined with context distillation. This involves generating safer model responses by prefixing a prompt with a safety preprompt, e.g., “You are a safe and responsible assistant,” and then fine-tuning the model on the safer responses without the preprompt, which essentially distills the safety preprompt (context).

Basically, the models are fine-tuned for helpfulness using the above procedures first. Then the models are further fine-tuned for safety.

2.3. Results

Left: Llama 2-Chat has a high win rate plus tie rate, which means that it outperforms numerous models for safety in the figure.

Right: Green area indicates Llama 2-Chat is better for helpfulneess and safety according to GPT-4.

Llama 2-Chat outperforms or on par with the SOTA models above.

- As seen above, with iterative RLHF and PPO, at the 5th round of fine-tuning, RLHF-5 obtains the best results judging by meta reward models and GPT-4.

- (There are detailed training descriptions and numerous experiments, please feel free to read the paper directly.)