Brief Review — Gemini: A Family of Highly Capable Multimodal Models

Foundation Model or Large Multimodal Model (LMM) Accepting Text, Visual, and Audio Inputs

Gemini: A Family of Highly Capable Multimodal Models

Gemini, by Gemini Team, Google

2023 arXiv v1, Over 70 Citations (Sik-Ho Tsang @ Medium)

- Gemini, a new family of multimodal models, is proposed. It exhibits remarkable capabilities across image, audio, video, and text understanding.

- The Gemini family consists of Ultra, Pro, and Nano sizes.

- The Gemini Ultra model advances the state of the art in 30 of the 32 benchmarks evaluated, notably being the first model to achieve human-expert performance on the well-studied exam benchmark MMLU.

- According to a Google blog post on 08 Feb 2024, Google Bard has been renamed Gemini.

Outline

- Gemini

- Text Understanding Results

- Image & Video Understanding Results

- Audio Understanding Results

- Responsible Deployment

1. Gemini

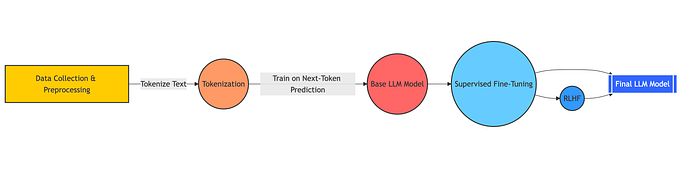

1.1. Model Architecture

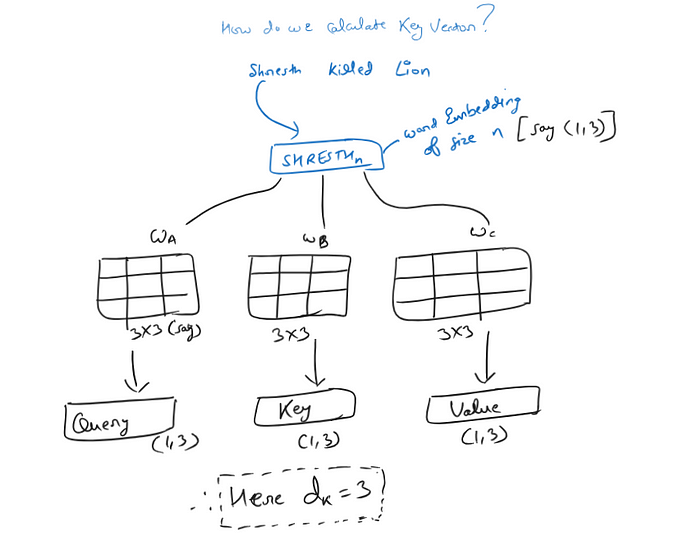

Gemini models build on top of Transformer decoders, enhanced with improvements in architecture and model optimization to enable stable training at scale and optimized inference on Google’s Tensor Processing Units (TPUs).
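To make "Transformer decoder" concrete: the defining piece of a decoder is causal self-attention, where each position may attend only to itself and to earlier positions. Since the paper does not disclose Gemini's layers or attention variant, the following is only a generic, single-head sketch in pure Python (the function names are hypothetical), not Gemini's implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax; -inf scores receive exactly zero weight."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # math.exp(-inf) == 0.0
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention with a causal (look-back-only) mask.

    Row i of the output mixes only value vectors 0..i, so a decoder can be
    trained on whole sequences yet still generate one token at a time.
    """
    d = len(q[0])
    out: Matrix = []
    for i, qi in enumerate(q):
        # Future positions (j > i) are masked with -inf before the softmax.
        scores = [
            sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) if j <= i else float("-inf")
            for j, kj in enumerate(k)
        ]
        weights = softmax(scores)
        out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))])
    return out


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # toy 3-token sequence, dimension 2
    for row in causal_self_attention(x, x, x):
        print(row)
```

Because later positions are masked, changing a later token never alters the outputs at earlier positions. Production implementations vectorize this with a triangular mask over a full score matrix and use many heads.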

- Gemini models are trained to support a 32k context length, employing efficient attention mechanisms.

- Gemini models are trained to accommodate textual input interleaved with a wide variety of audio and visual inputs, such as natural images, charts, screenshots, PDFs, and videos, and they can produce text and image outputs. The visual encoding of Gemini models is inspired by Flamingo, CoCa, and PaLI.

- Video understanding is accomplished by encoding the video as a sequence of frames in the large context window. Video frames or images can be interleaved naturally with text or audio as part of the model input. The models can handle variable input resolution.

- Gemini can directly ingest audio signals at 16kHz as features from the Universal Speech Model (USM) (Zhang et al., 2023).

- (Similar to OpenAI's GPT-4 and GPT-4V, they do not disclose model and training details such as the number of layers, the number of hidden units, or the pretraining dataset size.)
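The points above imply a simple budgeting problem: text, images, video frames, and audio features all share one 32k context window. The sketch below tallies that budget. The per-image, per-frame, and per-second token costs are not given in the paper and are hypothetical parameters, as are the class and function names; treating "32k" as exactly 32,768 is also an assumption.

```python
import math
from dataclasses import dataclass
from typing import List, Union

CONTEXT_LENGTH = 32_768        # "32k" context; the exact value 32,768 is an assumption
AUDIO_SAMPLE_RATE_HZ = 16_000  # audio is ingested at 16 kHz (per the article)


@dataclass
class Text:
    num_tokens: int


@dataclass
class Image:
    tokens_per_image: int  # hypothetical: depends on the undisclosed visual encoder


@dataclass
class Video:
    num_frames: int
    tokens_per_frame: int  # hypothetical


@dataclass
class Audio:
    num_samples: int          # raw samples at AUDIO_SAMPLE_RATE_HZ
    tokens_per_second: float  # hypothetical rate of audio-feature tokens


Segment = Union[Text, Image, Video, Audio]


def segment_tokens(seg: Segment) -> int:
    """Context-window positions consumed by one segment of the interleaved input."""
    if isinstance(seg, Text):
        return seg.num_tokens
    if isinstance(seg, Image):
        return seg.tokens_per_image
    if isinstance(seg, Video):
        # A video is encoded as a sequence of frames placed in the context window.
        return seg.num_frames * seg.tokens_per_frame
    if isinstance(seg, Audio):
        seconds = seg.num_samples / AUDIO_SAMPLE_RATE_HZ
        return math.ceil(seconds * seg.tokens_per_second)
    raise TypeError(f"unsupported segment: {seg!r}")


def total_tokens(sequence: List[Segment]) -> int:
    return sum(segment_tokens(seg) for seg in sequence)


def fits_in_context(sequence: List[Segment], context_length: int = CONTEXT_LENGTH) -> bool:
    return total_tokens(sequence) <= context_length


def max_video_frames(other_tokens: int, tokens_per_frame: int,
                     context_length: int = CONTEXT_LENGTH) -> int:
    """How many frames still fit after `other_tokens` are already in the context."""
    return max(0, (context_length - other_tokens) // tokens_per_frame)


if __name__ == "__main__":
    prompt = [
        Text(num_tokens=20),
        Image(tokens_per_image=100),
        Video(num_frames=10, tokens_per_frame=50),
        Audio(num_samples=2 * AUDIO_SAMPLE_RATE_HZ, tokens_per_second=25.0),
    ]
    print(total_tokens(prompt), fits_in_context(prompt))  # 670 True
```

For instance, if a hypothetical prompt already uses 768 tokens and each frame costs 100 tokens, `max_video_frames(768, 100)` returns 320, which suggests why long videos would need to be subsampled to fit.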

1.2. Gemini Variants

Four sizes are supported: Ultra, Pro, Nano-1, and Nano-2.

- For the Pro model, the inherent scalability of the infrastructure and learning algorithms enables pretraining to be completed in a matter of weeks, using a fraction of Ultra's resources.

- The Nano models leverage additional advances in distillation and training algorithms to produce best-in-class small language models.
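The paper does not describe its distillation recipe for the Nano models. As a point of reference only, the following sketches classic knowledge distillation (Hinton et al., 2015), in which a small student is trained to match a larger teacher's temperature-softened output distribution. The function names, default temperature, and mixing weight `alpha` are illustrative assumptions, not Gemini's settings.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) between temperature-softened distributions.

    Multiplying by temperature**2 keeps gradient magnitudes comparable across
    temperatures (Hinton et al., 2015).
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the large teacher
    q = softmax(student_logits, temperature)  # small student's predictions
    kl = sum(pi * (math.log(pi) - math.log(qi)) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


def combined_loss(student_logits: List[float], teacher_logits: List[float],
                  label: int, alpha: float = 0.5, temperature: float = 2.0) -> float:
    """alpha * distillation term + (1 - alpha) * cross-entropy on the hard label."""
    hard_ce = -math.log(softmax(student_logits)[label])
    soft = distillation_loss(student_logits, teacher_logits, temperature)
    return alpha * soft + (1 - alpha) * hard_ce
```

With `alpha=0` this reduces to ordinary cross-entropy on the hard label; raising the temperature exposes more of the teacher's relative preferences among the wrong answers.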

1.3. Some Training Details

- Gemini models are trained using TPUv5e and TPUv4.

Training Gemini Ultra used a large fleet of TPUv4 accelerators across multiple datacenters. This represents a significant increase in scale over the prior flagship model PaLM-2.

- The pretraining dataset uses data from web documents, books, and code, and includes image, audio, and video data.

2. Text Understanding Results

Gemini Pro outperforms inference-optimized models such as GPT-3.5 and performs comparably with several of the most capable models available, while Gemini Ultra outperforms all current models.

- For example, on MMLU, a holistic exam benchmark that measures knowledge across 57 subjects, Gemini Ultra outperforms all existing models with an accuracy of 90.04%. The benchmark authors gauge human-expert performance at 89.8%, and Gemini Ultra is the first model to exceed this threshold.
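To put these MMLU numbers in perspective, the gap between 90.04% and the 89.8% human-expert threshold is only 0.24 percentage points, so the way the score is aggregated across the 57 subjects matters. The sketch contrasts pooling all questions (micro-averaging) with averaging per-subject scores (macro-averaging). Which aggregation the reported number uses is not stated here, and the function names and toy counts are hypothetical.

```python
from typing import Dict, Tuple

HUMAN_EXPERT_ACCURACY = 89.8   # percent, as gauged by the MMLU authors
GEMINI_ULTRA_ACCURACY = 90.04  # percent, as reported for Gemini Ultra


def micro_accuracy(per_subject: Dict[str, Tuple[int, int]]) -> float:
    """Percent correct over all questions pooled together (each question weighs the same)."""
    correct = sum(c for c, _ in per_subject.values())
    total = sum(n for _, n in per_subject.values())
    return 100.0 * correct / total


def macro_accuracy(per_subject: Dict[str, Tuple[int, int]]) -> float:
    """Mean of per-subject percentages (each subject weighs the same)."""
    return sum(100.0 * c / n for c, n in per_subject.values()) / len(per_subject)


def margin_over_expert(accuracy: float, expert: float = HUMAN_EXPERT_ACCURACY) -> float:
    """Positive if the model exceeds the human-expert threshold, in percentage points."""
    return accuracy - expert


if __name__ == "__main__":
    print(f"{margin_over_expert(GEMINI_ULTRA_ACCURACY):.2f} points above human experts")
    toy = {"subject_a": (9, 10), "subject_b": (1, 2)}  # hypothetical (correct, total) counts
    print(micro_accuracy(toy), macro_accuracy(toy))
```

On the toy counts the two aggregations differ by more than 13 points, far larger than the margin over the expert threshold.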

Within the Gemini family, Gemini Ultra is the best model across all six capability groups evaluated. Gemini Pro, the second-largest model in the family, is also quite competitive while being much more efficient to serve.

- (There are also multilingual results. Please read the paper directly if interested.)

3. Image & Video Understanding Results

3.1. Image Benchmarking

Again, Gemini Ultra is state of the art across a wide range of image-understanding benchmarks.

- MMMU (Yue et al., 2023) is a recently released evaluation benchmark consisting of questions about images across 6 disciplines, each with multiple subjects, that require college-level knowledge to solve.

Gemini Ultra achieves the best score on this benchmark, advancing the state-of-the-art result by more than 5 percentage points and outperforming the previous best result in 5 of 6 disciplines.

- (There are also multilingual results. Please read the paper directly if interested.)

3.2. Illustrative Examples for Image

In one example, given a photo of a student's handwritten solution to a physics problem, Gemini uses its multimodal reasoning capabilities to understand the messy handwriting, correctly interpret the problem formulation, convert both the problem and the solution to mathematical typesetting, identify the specific reasoning step where the student went wrong, and then give a worked-through correct solution.

- In another example, the model is asked to generate matplotlib code that rearranges a set of subplots provided by the user.

The model output shows that it successfully solves this task by combining multiple capabilities: understanding the user's plot, inferring the code required to generate it, following the user's instructions to put subplots in the desired positions, and reasoning abstractly about the output plot.

3.3. Video Benchmarking

Gemini Ultra achieves state-of-the-art results on various few-shot video captioning tasks as well as zero-shot video question answering tasks.

3.4. Illustrative Examples for Video

3.5. Image Generation

When prompted with two yarn colors (pink and green) after a one-shot example, the model successfully generates an interleaved sequence of images and text, suggesting a cute green avocado with a pink seed or a green bunny with pink ears made from yarn.

4. Audio Understanding Results

4.1. Audio Benchmarking

The Gemini Pro model significantly outperforms the USM and Whisper models across all automatic speech recognition (ASR) and automatic speech translation (AST) tasks, on both English and multilingual test sets.

- The Gemini Nano-1 model also outperforms both USM and Whisper on all datasets except FLEURS.
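ASR quality is conventionally measured by word error rate (WER): the word-level edit distance (substitutions + deletions + insertions) between the reference transcript and the model output, divided by the number of reference words, so lower is better. The following is a minimal sketch of that metric. It assumes plain whitespace tokenization with no case or punctuation normalization, which real evaluation pipelines usually add, and the function name is hypothetical. AST is usually scored with BLEU instead, which is not shown.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (S + D + I) / N via word-level Levenshtein distance."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the first i-1 reference words and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra hypothesis word
                prev[j - 1] + cost,  # substitution (or match when cost == 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    # Two deleted words out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat mat"))  # 0.3333333333333333
```

Note that WER can exceed 1.0 when the hypothesis contains many insertions, which is why it is a rate rather than a bounded accuracy.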

4.2. Illustrative Examples for Audio

4.3. Modality Combination

- The above table shows a turn-by-turn interaction with the model, in which the user provides pictures and verbally asks about the next steps for cooking an omelet. The model's response text is reasonably accurate and shows that the model processes fine-grained image details to judge when the omelet is fully cooked.

5. Responsible Deployment

- Google follows a structured approach for responsible deployment in order to identify, measure, and manage foreseeable downstream societal impacts of the proposed models, in line with previous releases of Google’s AI technology (Kavukcuoglu et al., 2022).

- Throughout the lifecycle of the project, Google follows the structure above.

- (Please read the paper directly for more details.)