Review — Big Transfer (BiT): General Visual Representation Learning

Pre-training Large Models on Large Datasets for Better Performance on Downstream Tasks

Big Transfer (BiT): General Visual Representation Learning

BiT, by Google Research, Brain Team, Zürich, Switzerland

2020 ECCV, Over 300 Citations (Sik-Ho Tsang @ Medium)

- The paradigm of pre-training on large supervised datasets and fine-tuning the model on a target task is revisited.

- Big Transfer (BiT) is proposed to scale up pre-training.

Outline

- Big Transfer (BiT)

- Experimental Results

1. Big Transfer (BiT)

- Upstream components are those used during pre-training; downstream components are those used during fine-tuning on a new task.

1.1. Upstream Pre-Training

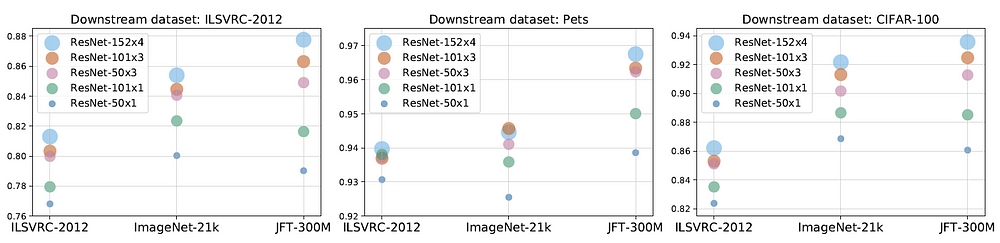

- The first component is scale. It is well known in deep learning that larger networks perform better on their respective tasks. Further, it is recognized that larger datasets require larger architectures to realize benefits.

- The interplay between computational budget (training time), architecture size, and dataset size is investigated.

To this end, three BiT models are trained on three large datasets: ILSVRC-2012 [39], which contains 1.3M images (BiT-S); ImageNet-21k [6], which contains 14M images (BiT-M); and JFT [43], which contains 300M images (BiT-L).

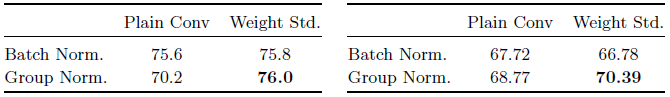

- The second component is Group Normalization (GN) [52] and Weight Standardization (WS) [28].

- Batch Normalization (BN) is detrimental to Big Transfer for two reasons. First, when training large models with small per-device batches, BN performs poorly or incurs an inter-device synchronization cost. Second, because BN must update its running statistics, it is detrimental for transfer.

- GN, when combined with WS, has been shown to improve performance on small-batch training for ImageNet and COCO [28].

Here, the combination of GN and WS is shown to be useful for training with large batch sizes as well, and to have a significant impact on transfer learning.
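To make the two BN problems and the GN/WS alternative concrete, here is a minimal sketch in plain Python (nested lists instead of tensors, so it needs no framework). It is an illustration under simplifying assumptions, not BiT's implementation: the learnable per-channel scale and shift of BN and GN are omitted, BN is shown only in training mode (no running averages), and convolution filters are assumed to be already flattened to one list of fan-in weights per output channel. The function names `batch_norm_train`, `group_norm` and `weight_standardize` are hypothetical.

```python
import math
from typing import List

# Simplified shapes (assumption): batch is [N][C]; an image is [C][H][W]; filters are [C_out][fan_in].
Image = List[List[List[float]]]


def batch_norm_train(batch: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """Training-mode BN: each feature is normalized with statistics taken ACROSS the batch."""
    n, num_features = len(batch), len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        col = [row[j] for row in batch]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / n
        for i in range(n):
            out[i][j] = (col[i] - mean) / math.sqrt(var + eps)
    return out


def group_norm(sample: Image, num_groups: int, eps: float = 1e-5) -> Image:
    """GN: statistics are computed per sample over groups of channels, never across the batch."""
    c = len(sample)
    if c % num_groups:
        raise ValueError("number of channels must be divisible by num_groups")
    size = c // num_groups
    out: Image = []
    for g in range(num_groups):
        chans = sample[g * size:(g + 1) * size]
        vals = [v for ch in chans for row in ch for v in row]
        mean = sum(vals) / len(vals)
        var = sum((v - mean) ** 2 for v in vals) / len(vals)
        inv_std = 1.0 / math.sqrt(var + eps)
        for ch in chans:
            out.append([[(v - mean) * inv_std for v in row] for row in ch])
    return out


def weight_standardize(filters: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """WS: each output filter is standardized to zero mean and unit variance over its fan-in."""
    result = []
    for w in filters:
        mean = sum(w) / len(w)
        var = sum((x - mean) ** 2 for x in w) / len(w)
        result.append([(x - mean) / math.sqrt(var + eps) for x in w])
    return result


# BN: the same vector x is normalized differently depending on its batch-mates,
# and a per-device batch of one collapses every feature to zero.
x = [1.0, 2.0]
print(batch_norm_train([x, [3.0, 4.0]])[0])    # first feature is about -1
print(batch_norm_train([x, [-5.0, 10.0]])[0])  # first feature is about +1
print(batch_norm_train([x])[0])                # [0.0, 0.0]

# GN and WS only look at one sample or one filter, so batch size does not matter.
img = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]]  # 2 channels, 2x2
print(group_norm(img, num_groups=1))
print(weight_standardize([[1.0, 2.0, 3.0]]))
```

The BN outputs for the same vector `x` change with its batch-mates and vanish for a batch of one, which is the small per-device batch problem described above. GN and WS compute their statistics from a single sample or a single filter, so they behave identically for any batch size.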

1.2. Transfer to Downstream Tasks

- It is common to scale up the resolution by a small factor at test time. As an alternative, FixRes [49] adds a step at which the trained model is fine-tuned to the test resolution. The latter is well suited to transfer learning, since the resolution change can be included in the fine-tuning step.

- Setting an appropriate schedule length, i.e. training longer for larger datasets, provides sufficient regularization.

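- Fine-tuning is performed for each downstream task using a simple heuristic, BiT-HyperRule, which sets the resolution, schedule length and use of MixUp from the dataset's image size and number of examples, instead of an expensive per-task hyperparameter search.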
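The resolution and schedule rules above can be sketched as a small function. The thresholds and values below are my reading of the BiT-HyperRule as described in the BiT paper (initial learning rate 0.003 and batch size 512; resize to 160 and crop to 128 when the image area is below 96×96 pixels, otherwise resize to 448 and crop to 384; 500, 10k or 20k steps for fewer than 20k, fewer than 500k, or more training examples; learning-rate drops by 10× at 30%, 60% and 90% of training; MixUp with α = 0.1 except on small tasks). Treat them as assumptions to verify against the paper; `bit_hyper_rule`, `FineTuneConfig` and `learning_rate` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class FineTuneConfig:
    resize: int                   # images are resized to resize x resize ...
    crop: int                     # ... then randomly cropped to crop x crop (the fine-tuning resolution)
    total_steps: int
    decay_steps: Tuple[int, ...]  # steps at which the learning rate is divided by 10
    mixup_alpha: Optional[float]  # None means MixUp is not used
    base_lr: float = 0.003
    batch_size: int = 512


def bit_hyper_rule(num_train_examples: int, height: int, width: int) -> FineTuneConfig:
    # Assumed values, following the BiT-HyperRule as described in the paper; verify before relying on them.
    # Resolution: chosen from the original image size, so the resolution change
    # is folded into fine-tuning instead of being applied only at test time.
    if height * width < 96 * 96:
        resize, crop = 160, 128
    else:
        resize, crop = 448, 384

    # Schedule length: train longer on larger downstream datasets.
    if num_train_examples < 20_000:
        steps, mixup = 500, None
    elif num_train_examples < 500_000:
        steps, mixup = 10_000, 0.1
    else:
        steps, mixup = 20_000, 0.1

    # Learning-rate drops at 30%, 60% and 90% of training (integer math avoids rounding surprises).
    decay = tuple(steps * pct // 100 for pct in (30, 60, 90))
    return FineTuneConfig(resize, crop, steps, decay, mixup)


def learning_rate(cfg: FineTuneConfig, step: int) -> float:
    """Step schedule: divide the base rate by 10 for each decay boundary already passed."""
    drops = sum(1 for s in cfg.decay_steps if step >= s)
    return cfg.base_lr / (10 ** drops)


cfg = bit_hyper_rule(num_train_examples=50_000, height=32, width=32)  # a CIFAR-sized task
print(cfg.crop, cfg.total_steps, cfg.decay_steps, cfg.mixup_alpha)  # 128 10000 (3000, 6000, 9000) 0.1
```

Because every choice is derived from two dataset statistics, the same rule can be applied to any new task without a hyperparameter sweep, which is the point of having a fixed heuristic.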

2. Experimental Results

2.1. BiT Performance

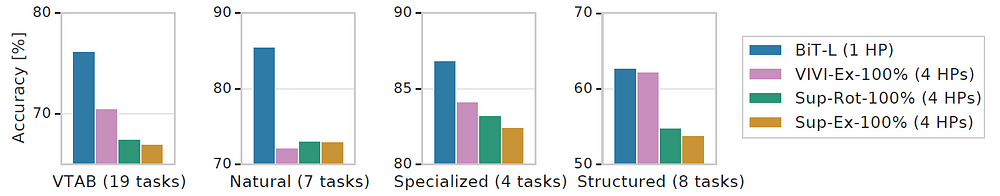

- The Visual Task Adaptation Benchmark (VTAB) [58] consists of 19 diverse visual tasks, each with 1000 training samples (the VTAB-1k variant). The tasks are organized into three groups: natural, specialized and structured. The natural group contains classical datasets of natural images captured with standard cameras. The specialized group also contains real-world images, but captured through specialist equipment, such as satellite or medical imaging. Finally, the structured tasks assess understanding of the structure of a scene; they are mostly generated from synthetic environments and include object counting and 3D depth estimation.
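As an illustration of how the benchmark is organized, the sketch below groups the 19 VTAB-1k tasks (7 natural, 4 specialized, 8 structured) and averages per-task accuracies into group scores and an overall score, taken as the mean over all 19 tasks. The task identifiers are informal names chosen here, not official dataset IDs, and `vtab_summary` is a hypothetical helper; no real results are included.

```python
from statistics import mean
from typing import Dict, List

# Informal identifiers for the 19 VTAB-1k tasks (not official dataset IDs).
VTAB_GROUPS: Dict[str, List[str]] = {
    "natural": ["caltech101", "cifar100", "dtd", "flowers102", "pets", "sun397", "svhn"],
    "specialized": ["patch_camelyon", "eurosat", "resisc45", "diabetic_retinopathy"],
    "structured": ["clevr_count", "clevr_dist", "dmlab", "dsprites_loc", "dsprites_ori",
                   "kitti_dist", "smallnorb_azimuth", "smallnorb_elevation"],
}


def vtab_summary(acc: Dict[str, float]) -> Dict[str, float]:
    """Average per-task accuracies into per-group scores and an overall score over all tasks."""
    missing = [t for tasks in VTAB_GROUPS.values() for t in tasks if t not in acc]
    if missing:
        raise KeyError(f"missing accuracies for: {missing}")
    summary = {group: mean(acc[t] for t in tasks) for group, tasks in VTAB_GROUPS.items()}
    # Overall score: plain mean over all 19 tasks, NOT the mean of the three group scores.
    summary["overall"] = mean(acc[t] for tasks in VTAB_GROUPS.values() for t in tasks)
    return summary


# Placeholder accuracies, only to show the call shape.
placeholder = {t: 50.0 for tasks in VTAB_GROUPS.values() for t in tasks}
print(vtab_summary(placeholder))  # every score is 50.0
```

Because the groups contain 7, 4 and 8 tasks, the overall score is not the plain average of the three group scores: a gain on the structured group moves the overall number more than the same gain on the specialized group.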

BiT-L outperforms previously reported generalist SOTA models as well as, in many cases, the SOTA specialist models.

- BiT-M trained on ImageNet-21k leads to substantially improved visual representations compared to the same model trained on ILSVRC-2012 (BiT-S).

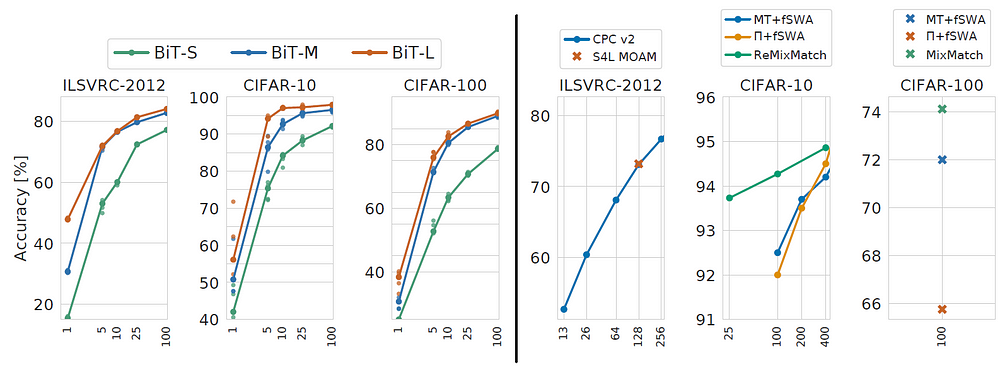

2.2. Tasks with Few Datapoints

- The effect of the number of labeled downstream samples is studied.

Even with very few samples per class, BiT-L demonstrates strong performance and quickly approaches performance of the full-data regime.
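The few-shot setting only needs a training subset with a fixed number of labeled examples per class. Below is a minimal sketch of drawing such a k-shot subset reproducibly; `sample_k_shot` and the toy dataset are hypothetical, and this is not necessarily how the paper drew its subsets.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple

Example = Tuple[object, Hashable]  # (input, label)


def sample_k_shot(examples: Sequence[Example], k: int, seed: int = 0) -> List[Example]:
    """Return exactly k (input, label) pairs per class, chosen reproducibly from a seed."""
    by_class: Dict[Hashable, List[Example]] = defaultdict(list)
    for example in examples:
        by_class[example[1]].append(example)

    rng = random.Random(seed)
    subset: List[Example] = []
    for label in sorted(by_class, key=repr):  # fixed class order keeps the draw reproducible
        pool = by_class[label]
        if len(pool) < k:
            raise ValueError(f"class {label!r} has {len(pool)} examples, fewer than k={k}")
        subset.extend(rng.sample(pool, k))
    return subset


# Hypothetical toy dataset: 100 inputs spread over 10 classes.
toy = [(f"img_{i}", i % 10) for i in range(100)]
one_shot = sample_k_shot(toy, k=1)   # 10 examples, one per class
five_shot = sample_k_shot(toy, k=5)  # 50 examples, five per class
```

Fixing the seed matters in this regime: with one or a few examples per class, which images are drawn can change accuracy noticeably, so comparisons are fairer when every model sees the same subset.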

- BiT uses extra labeled out-of-domain data, whereas semi-supervised learning uses extra unlabeled in-domain data.

BiT outperforms the SOTA semi-supervised learning method.

BiT is the best on natural, specialized and structured tasks.

2.3. Object Detection

RetinaNet models with pre-trained BiT backbones outperform standard ImageNet pre-trained models on COCO.

2.4. Further Analysis

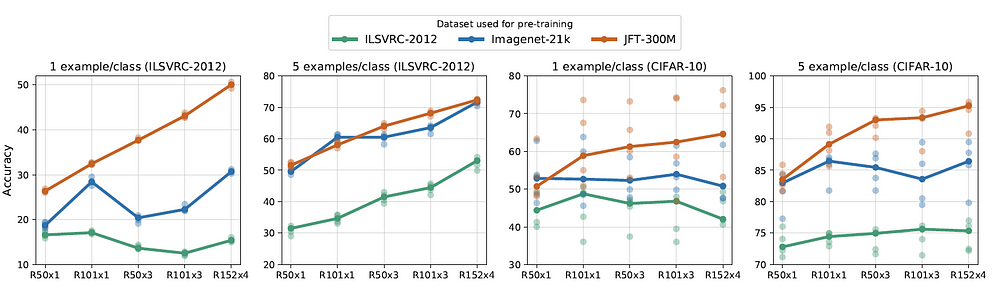

- The general consensus is that larger neural networks result in better performance. With larger architectures, models pre-trained on JFT-300M significantly outperform those pre-trained on ILSVRC-2012 or ImageNet-21k.

- In the extreme case of one example per class, larger architectures outperform smaller ones when pre-trained on large upstream data.

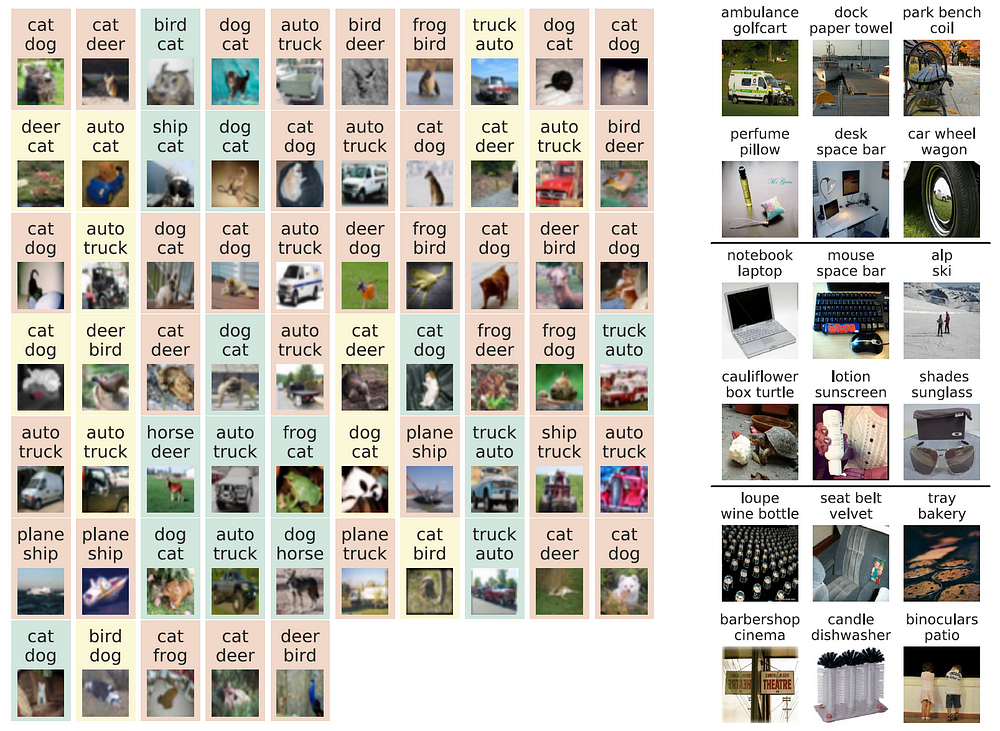

- Left: All mistakes on CIFAR-10, colored by whether 5 human raters agreed with BiT-L’s prediction (green), with the ground-truth label (red) or were unsure or disagreed with both (yellow).

- Right: Selected representative mistakes of BiT-L on ILSVRC-2012.

- In many cases, we see that these label/prediction mismatches are not true ‘mistakes’: the prediction is valid, but it does not match the label.

- There are also cases of label noise, where the model’s prediction is a better fit than the ground-truth label.

- Overall, inspecting these mistakes suggests that performance on the standard vision benchmarks is approaching a saturation point.

Reference

[2020 ECCV] [BiT]

Big Transfer (BiT): General Visual Representation Learning
